Table of Contents

- Introduction

- Understanding Microservices Architecture

- Key QA Challenges in Microservices Architecture

- QA Strategies for Microservices

- Best Practices for QA in Microservices

- Tools and Technologies

- Case Studies and Real-World Examples

- Conclusion

Introduction

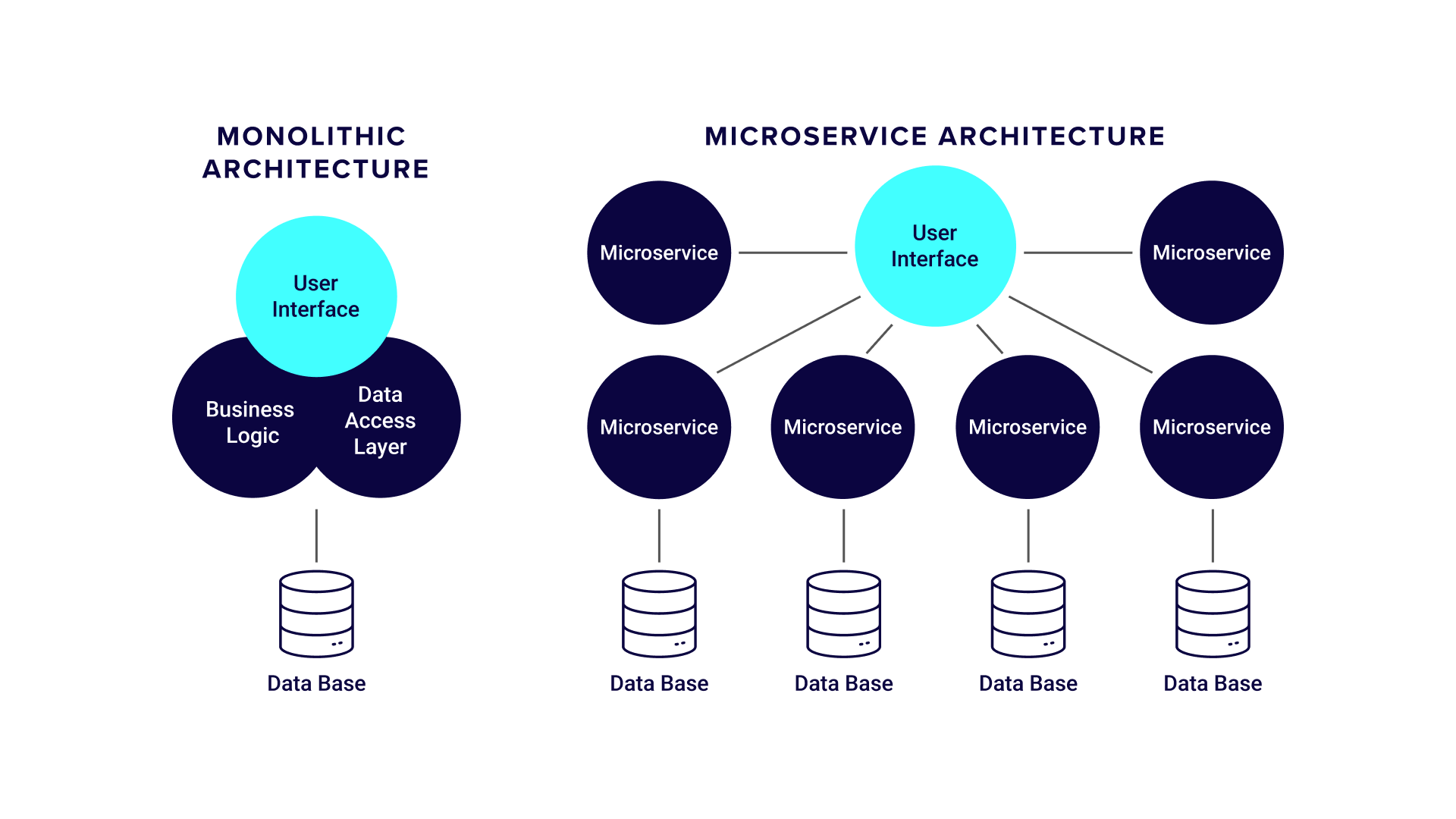

- Overview of Microservices Architecture: Microservices Architecture (MSA) has changed how software applications are designed and delivered. Unlike a monolithic design, which builds an application as a single large unit, a microservices architecture breaks it into smaller, independent services that can be developed, deployed, and scaled on their own. Each service typically performs a specific business function, communicates over a network, and is owned by a dedicated team. System Testing Services play a critical role in ensuring the seamless interaction of these distributed components, validating their integration, and maintaining overall application stability. Decentralized approaches are becoming increasingly popular because they offer the potential for greater flexibility, faster deployment times, and self-managing teams.

- Importance of QA in MSA: Quality assurance makes it possible to ensure that every microservice not only runs without a hitch on its own but also connects seamlessly to other services. The distributed nature of microservices makes testing more difficult and complex. The success of a microservices-based system hinges on the QA team's capacity to overcome these obstacles and ensure that the application offers a reliable and smooth experience.

Understanding Microservices Architecture

What is Microservices Architecture?

Microservices architecture is a design approach that partitions an application into several loosely coupled, independently deployable services. Each microservice can be built, tested, and deployed independently of the others and is intended to fulfill a specific business purpose. In contrast, a monolithic architecture consists of a single deployed unit whose components are tightly interconnected. Because of their fault tolerance, versatility, and ease of maintenance, microservices provide a level of flexibility that monolithic applications usually don't.

Benefits of Microservices

- Scalability: Services can be scaled independently based on demand, allowing for efficient use of resources.

- Flexibility: Different technologies and languages can be used for different services, empowering teams to select the best tools for the job.

- Faster Time-to-Market: Teams can work on different services at the same time, speeding up development and deployment cycles.

- Resilience: Failures in one service don't necessarily bring down the entire application, improving overall system reliability.

Key QA Challenges in Microservices Architecture

- Increased Complexity: In a microservices design, the multitude of independent services makes testing considerably more difficult. QA teams must verify two things: that every service functions correctly on its own, and that the interactions between services are smooth and error-free. An additional layer of complexity arises from the fact that services are sometimes built by several teams, leading to variations in coding standards, testing procedures, and toolkits.

- Service Dependency Management: Several dependencies must be managed when testing microservices. One service's modification may have an impact on other services. Stubs and mocks are used to mimic the behaviour of dependent services, and careful planning is needed to manage these dependencies during testing. Assuring seamless interoperability among all services is a major task.

- Data Consistency Issues: Data in a microservices architecture is typically distributed across several services, each of which has its own database or storage system. Maintaining data consistency between services can be difficult, especially when real-time communication is required. To handle situations where data may temporarily fall out of sync, QA teams need to put methods in place for testing eventual consistency.

- Environment Configuration: It is imperative in microservices architecture to maintain consistent settings across development, testing, and production. Hard-to-debug problems may arise from configuration differences. To automate testing environments, QA teams must use configuration management tools and standardize their environments.

- Performance Testing: The distributed nature of a microservices architecture makes performance testing difficult. The system's ability to withstand peak traffic without experiencing performance degradation is something that QA teams must verify by testing how services function both individually and collectively under stress. An important part of performance testing in MSA is locating bottlenecks and making sure services can scale properly.
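The eventual-consistency testing mentioned under Data Consistency Issues can be sketched as a polling assertion: instead of checking a second service's data immediately, the test waits a bounded time for it to converge. The following is a minimal illustration using only the Python standard library. `OrderService`, the in-memory `reporting_store`, and the 0.2 second replication delay are all hypothetical stand-ins for real services and messaging, not part of any specific system.

```python
import threading
import time


def wait_until(predicate, timeout=2.0, interval=0.05):
    """Poll predicate until it returns True or the timeout elapses."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if predicate():
            return True
        time.sleep(interval)
    return predicate()  # One last check at the deadline.


class OrderService:
    """Hypothetical service that owns order data and replicates it asynchronously."""

    def __init__(self, reporting_store, delay=0.2):
        self.orders = {}
        self._reporting_store = reporting_store
        self._delay = delay

    def create_order(self, order_id, total):
        self.orders[order_id] = total
        # Simulate an event reaching the other service some time later.
        threading.Timer(self._delay, self._replicate, args=(order_id, total)).start()

    def _replicate(self, order_id, total):
        self._reporting_store[order_id] = total


def check_eventual_consistency():
    """Return True if the second store converges within the timeout."""
    reporting_store = {}  # Stands in for a second service's database.
    service = OrderService(reporting_store)
    service.create_order("order-1", 42)
    return wait_until(lambda: reporting_store.get("order-1") == 42)
```

The timeout is the important design choice here: it encodes how long the system is allowed to stay out of sync before the test treats the delay as a defect.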

QA Strategies for Microservices

- Service-Level Testing: Service-level testing, or testing individual services independently, is the cornerstone of quality assurance in microservices architecture. This comprises component testing, which verifies that the service operates as intended overall, and unit testing, which tests the tiniest portions of the code. To replicate dependencies and isolate the service being tested, mocking and stubbing are essential at this point. QA teams can detect problems early on before they affect other services by concentrating on service-level testing.

- Integration Testing: Interactions between services are the focus of integration testing. It's critical to confirm that services can cooperate as intended because microservices mostly rely on APIs for communication. Contract testing - which can be done with tools like Pact - is especially helpful for microservices because it guarantees that the contracts, which are agreements between services, are upheld, which helps to avoid integration problems when services change.

- End-to-End Testing: Within the context of microservices, end-to-end testing is the practice of testing an application from the user's point of view. This type of testing is more difficult in MSA because it requires coordinating many services. QA teams must verify that the complete application functions correctly and handles data and user interactions across services. Coordination and careful test data management are critical to effective end-to-end testing in a microservices architecture.

- API Testing: Microservices frequently use APIs for communication, hence API testing is an essential component of quality assurance. QA teams need to ensure that APIs are flexible, dependable, and properly documented, and that they handle a wide range of scenarios, including error conditions and edge cases. Teams frequently automate and expedite API testing by using tools like Postman, Swagger, and SoapUI.

- Automated Testing: Microservices require automation due to their quick updates and deployments. Integrated into the CI/CD pipeline, automated tests allow teams to ensure that new code doesn't break existing features and help find issues early on. The tools and automated testing frameworks must be chosen carefully to fit the microservices model. For example, Selenium is used for user interface testing, whereas JUnit is used for unit testing.
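To make service-level testing with mocks more concrete, here is a minimal sketch in Python using the standard library's `unittest.mock`. The `CheckoutService` class, its `payment_client` dependency, and the `charge` method are hypothetical names invented for illustration. The idea is simply that the dependent service is replaced by a mock so the service under test can be exercised in isolation.

```python
from unittest.mock import Mock


class CheckoutService:
    """Hypothetical service under test; it depends on a remote payment service."""

    def __init__(self, payment_client):
        self._payment_client = payment_client

    def checkout(self, order_id, amount):
        if amount <= 0:
            raise ValueError("amount must be positive")
        response = self._payment_client.charge(order_id=order_id, amount=amount)
        return "CONFIRMED" if response["status"] == "approved" else "REJECTED"


def run_service_level_checks():
    # The mock replaces the real payment service, so no network is involved.
    payment_client = Mock()
    payment_client.charge.return_value = {"status": "approved"}
    service = CheckoutService(payment_client)

    approved = service.checkout("order-1", 25)
    # Verify the interaction with the dependency, not just the result.
    payment_client.charge.assert_called_once_with(order_id="order-1", amount=25)

    # Reconfigure the mock to simulate a declined payment.
    payment_client.charge.return_value = {"status": "declined"}
    declined = service.checkout("order-2", 10)
    return approved, declined
```

In a Java codebase the same pattern would typically be written with JUnit and Mockito; the structure of the test stays the same.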

Best Practices for QA in Microservices

1. Shift-Left Testing: Early integration of testing into the development lifecycle is known as "shift-left testing." This strategy lowers the cost of problems and improves overall quality in microservices by helping to discover and fix issues sooner. Early QA involvement helps teams make sure that quality is ingrained in every service from the outset.

2. CI/CD Integration: The continuous integration and continuous deployment (CI/CD) model has a significant impact on microservices design. Integrating testing into the CI/CD pipeline allows teams to automate the testing process and ensure that services are thoroughly tested before they are released. In addition to lowering the chance of releasing defective code, this allows for faster and more reliable releases.

3. Microservices Testing Pyramid: The testing pyramid for microservices outlines a testing methodology where most tests are unit tests, fewer are integration tests, and end-to-end tests are the least common. With a focus on quick feedback at the bottom and thorough validation at the top, this method supports effective and scalable testing.

4. Service Virtualization: By modelling the behavior of dependent services, service virtualization enables QA teams to test services independently. This is especially helpful when several microservices must be tested at once, since dependencies and availability problems can make that difficult. Using tools such as WireMock, teams can build virtual services just for testing, preventing testing from being blocked by unavailable services.

5. Monitoring and Logging: Ensuring quality assurance in microservices requires strong monitoring and logging. When problems arise, logging offers important information about what went wrong. Monitoring tools help track the health and performance of services. By putting thorough monitoring and logging procedures in place, teams can identify and address problems quickly, enhancing the system's dependability and maintainability.
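As a rough illustration of the service virtualization idea described above, the sketch below starts a tiny stub HTTP server with canned responses and points a client at it. It uses only the Python standard library rather than WireMock's own API. The inventory service, the `/stock/<sku>` path, and the response fields are hypothetical.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class InventoryStubHandler(BaseHTTPRequestHandler):
    """Canned responses standing in for a hypothetical inventory service."""

    def do_GET(self):
        if self.path == "/stock/sku-1":
            status, payload = 200, {"sku": "sku-1", "available": 3}
        else:
            status, payload = 404, {"error": "not found"}
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # Keep test output quiet.


def fetch_stock(host, port, sku):
    """Client code under test: calls the (virtual) inventory service."""
    connection = http.client.HTTPConnection(host, port, timeout=2)
    try:
        connection.request("GET", f"/stock/{sku}")
        response = connection.getresponse()
        return response.status, json.loads(response.read())
    finally:
        connection.close()


def run_against_virtual_service():
    # Port 0 asks the operating system for any free port.
    server = HTTPServer(("127.0.0.1", 0), InventoryStubHandler)
    host, port = server.server_address
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        return fetch_stock(host, port, "sku-1"), fetch_stock(host, port, "sku-9")
    finally:
        server.shutdown()
        server.server_close()
```

A dedicated tool adds request matching, fault injection, and recording on top of this basic pattern, which is why teams usually reach for one instead of hand-written stubs.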

Tools and Technologies

Testing Tools

Several tools are well-suited for testing in microservices environments:

- JUnit: A popular framework for unit testing in Java, widely used in microservices testing.

- Mockito: A framework for creating mock objects in unit tests, helping to isolate the service under test.

- WireMock: A tool for service virtualization, allowing QA teams to create mock services for testing.

- Pact: A contract testing tool that ensures services can communicate correctly with each other.
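To show what a contract between services looks like in practice, here is a hand-rolled consumer-driven contract check in Python. It does not use Pact's API; it only illustrates the underlying idea that the consumer records the request it sends and the response shape it relies on, and the provider is verified against that record. The `/users/42` endpoint, the field names, and `provider_handle` are hypothetical.

```python
# The consumer's expectations: which request it makes and which
# response fields (and types) it depends on.
CONSUMER_CONTRACT = {
    "request": {"method": "GET", "path": "/users/42"},
    "response": {"status": 200, "body": {"id": int, "email": str}},
}


def provider_handle(method, path):
    """Hypothetical provider implementation being verified."""
    if method == "GET" and path == "/users/42":
        # Extra fields such as "name" are allowed; the consumer ignores them.
        return 200, {"id": 42, "email": "a@example.com", "name": "Ada"}
    return 404, {}


def verify_contract(contract, handler):
    """Return a list of violations; an empty list means the contract holds."""
    request = contract["request"]
    expected = contract["response"]
    status, body = handler(request["method"], request["path"])
    violations = []
    if status != expected["status"]:
        violations.append(f"status {status} != {expected['status']}")
    for field, expected_type in expected["body"].items():
        if field not in body:
            violations.append(f"missing field '{field}'")
        elif not isinstance(body[field], expected_type):
            violations.append(f"field '{field}' is not {expected_type.__name__}")
    return violations
```

Running `verify_contract` in the provider's build means a change that removes or retypes a field the consumer needs fails before it is deployed, while additive changes pass.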

CI/CD Tools: Integrating testing into CI/CD pipelines is essential in microservices. Tools like Jenkins, CircleCI, and GitLab help automate the testing process, ensuring that services are continuously tested and deployed with minimal manual intervention.

Containerization and Orchestration: Docker and Kubernetes are used extensively with microservices to manage testing environments. Teams can use Docker to create consistent environments across stages, and Kubernetes orchestrates these environments so that services scale and remain available for testing.

Case Studies and Real-World Examples

Success Stories

Netflix: Netflix was one of the earliest large-scale adopters of microservices architecture. To handle its enormous scale and quickly changing user needs, the organization moved from a monolithic architecture to microservices. To verify resilience, Netflix practices chaos engineering, deliberately injecting failures into its systems, and combines it with extensive automated testing and real-time monitoring to maintain high availability and performance.

Lessons Learned

- Handling Dependencies: One typical problem in microservices development is managing dependencies across services. Companies that have successfully deployed microservices frequently stress that contract testing and service virtualization are critical tools for addressing these issues.

- Importance of Monitoring: As real-world cases demonstrate, microservices architecture requires effective monitoring. Organizations that place a high priority on monitoring and logging are better prepared to resolve problems in production and to provide a dependable user experience.
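One common way to make logs useful across services is to attach a shared correlation ID to every log entry produced while handling a request. The article does not prescribe this technique; the sketch below is an assumption about how it might look using Python's standard `logging` module, with hypothetical service names `orders` and `payments`.

```python
import io
import json
import logging
import uuid


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object per line."""

    def format(self, record):
        return json.dumps({
            "level": record.levelname,
            "service": getattr(record, "service", "unknown"),
            "correlation_id": getattr(record, "correlation_id", None),
            "message": record.getMessage(),
        })


def build_logger(service, correlation_id, stream):
    logger = logging.getLogger(f"demo.{service}")
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.handlers.clear()  # Avoid duplicate handlers on repeated calls.
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    # LoggerAdapter injects the extra fields into every record.
    return logging.LoggerAdapter(
        logger, {"service": service, "correlation_id": correlation_id}
    )


def trace_request():
    """Log from two hypothetical services under one correlation ID."""
    stream = io.StringIO()  # Stands in for a central log store.
    correlation_id = str(uuid.uuid4())
    build_logger("orders", correlation_id, stream).info("order received")
    build_logger("payments", correlation_id, stream).warning("payment retry")
    entries = [json.loads(line) for line in stream.getvalue().splitlines()]
    return correlation_id, entries
```

Filtering the collected entries by `correlation_id` then reconstructs the path of a single request through every service it touched.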

Conclusion

The special difficulties of testing in a distributed context necessitate a systematic approach to quality assurance in microservices architecture. QA teams may make sure that every service works properly and integrates with others by concentrating on automation, integration testing, and service-level testing. Microservices-based systems are more dependable and of higher quality when best practices like strong monitoring, CI/CD integration, and shift-left testing are used.

In the future, QA will be further shaped by developments like serverless architecture, which allows microservices to be operated without managing the underlying infrastructure. QA teams need to be flexible and creative to address new difficulties and ensure the delivery of high-quality software as microservices adoption continues to grow.

About Author

Having started his journey as a software tester in 2020, Rahul Patel has progressed to the position of Associate QA Team Lead at PixelQA, a Software Testing Company.

He intends to take on more responsibilities and leadership roles and wants to stay at the forefront by adapting to the latest QA and testing practices.
